Big data is a collection of datasets so large that they cannot be processed using traditional computing techniques. It is not a single technique or tool; rather, it involves many tools, techniques, and frameworks.

Some of the fields that come under the umbrella of Big Data are listed below.

Black Box Data − The black box is a component of helicopters, airplanes, jets, and similar aircraft. It captures the voices of the flight crew, recordings from microphones and earphones, and performance information about the aircraft.

Social Media Data − Social media platforms such as Facebook and Twitter hold the information and views posted by millions of people across the globe.

Stock Exchange Data − Stock exchange data holds information about the ‘buy’ and ‘sell’ decisions that customers make on the shares of different companies.

Power Grid Data − Power grid data holds information about the power consumed by a particular node with respect to a base station.

Transport Data − Transport data includes the model, capacity, distance, and availability of a vehicle.

Search Engine Data − Search engines retrieve large amounts of data from many different databases.

The data in these fields falls into three types.

Structured data − Relational data.

Semi-structured data − XML data.

Unstructured data − Word documents, PDFs, plain text, and media logs.
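To make the three types concrete, here is a minimal sketch using only the Python standard library. The sample records are hypothetical: a CSV snippet stands in for relational (structured) data with a fixed schema, an XML snippet for semi-structured data whose fields are described by tags but may vary between records, and a free-text log line for unstructured data, where the program has to extract what it needs itself.

```python
import csv
import io
import re
import xml.etree.ElementTree as ET

# Structured (hypothetical sample): every row follows the same fixed schema,
# like a table in a relational database.
structured = io.StringIO("id,name,amount\n1,alice,120\n2,bob,75\n")
rows = list(csv.DictReader(structured))
total = sum(int(r["amount"]) for r in rows)

# Semi-structured (hypothetical sample): tags name the fields, but records
# can differ in shape; the second order has no <amount> element.
semi = """<orders>
  <order id="1"><name>alice</name><amount>120</amount></order>
  <order id="2"><name>bob</name></order>
</orders>"""
root = ET.fromstring(semi)
# findtext returns None when the child element is missing.
amounts = [o.findtext("amount") for o in root.findall("order")]

# Unstructured (hypothetical sample): no schema at all, so we pull out
# the value we want with a pattern of our own choosing.
log_line = "user alice uploaded video.mp4 (35 MB)"
size_mb = int(re.search(r"\((\d+) MB\)", log_line).group(1))

print(total, amounts, size_mb)  # → 195 ['120', None] 35
```

The further down the list the data sits, the more interpretation the processing code has to supply itself, which is part of why unstructured data is the hardest to handle at scale.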

In the traditional approach, an enterprise has a single computer to store and process big data. For storage, it relies on a database from a vendor such as Oracle or IBM. This approach works well for applications that process smaller volumes of data, but when it comes to huge amounts of data, processing everything through a single database becomes a tedious and slow bottleneck.

User <--> System <--> DB

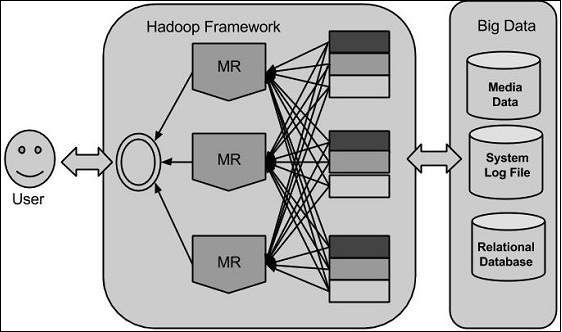

Using the solution Google described for this problem (its MapReduce programming model), Doug Cutting and his team developed an open-source project called Hadoop.

Hadoop runs applications using the MapReduce algorithm, in which the data is split into chunks that are processed in parallel on different nodes. In short, Hadoop is used to develop applications that can perform complete statistical analysis on huge amounts of data.
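The MapReduce idea can be illustrated with a minimal single-machine sketch in Python. This is not Hadoop code: it assumes word counting as the task (a common teaching example), and a `ThreadPoolExecutor` stands in for the cluster's nodes. The map phase runs on each input split independently and emits `(word, 1)` pairs, a shuffle step groups the pairs by key, and the reduce phase sums each group. The function names are hypothetical.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def map_phase(split: str) -> list[tuple[str, int]]:
    # Emit a (word, 1) pair for every word in this input split.
    return [(word.lower(), 1) for word in split.split()]


def shuffle(mapped: list[list[tuple[str, int]]]) -> dict[str, list[int]]:
    # Group every emitted value by its key, as the framework does
    # between the map and reduce phases.
    groups: dict[str, list[int]] = defaultdict(list)
    for pairs in mapped:
        for key, value in pairs:
            groups[key].append(value)
    return groups


def reduce_phase(item: tuple[str, list[int]]) -> tuple[str, int]:
    # Combine all values for one key into a single result.
    key, values = item
    return key, sum(values)


def word_count(splits: list[str], workers: int = 4) -> dict[str, int]:
    # Threads stand in for cluster nodes: each split is mapped on its own,
    # then each key group is reduced on its own.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        mapped = list(pool.map(map_phase, splits))
        reduced = pool.map(reduce_phase, shuffle(mapped).items())
        return dict(reduced)


splits = ["Deer Bear River", "Car Car River", "Deer Car Bear"]
print(word_count(splits))  # → {'deer': 2, 'bear': 2, 'river': 2, 'car': 3}
```

Because no map call depends on another split and no reduce call depends on another key, both phases can be spread across as many workers as are available. Hadoop applies the same split into independent pieces across many machines; its jobs are typically written against its Java MapReduce API rather than anything like this sketch.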